Evolution of Data Center Infrastructure in India

Rapid sovereign cloud adoption, fintech innovation, and the government’s firm support for data sovereignty are all contributing to the unprecedented growth of India’s digital economy. At the center of this change is India’s sovereign cloud infrastructure, which ensures that sensitive data, whether financial, governmental, or citizen-related, stays inside Indian borders and remains subject to Indian jurisdiction.

The recent Digital Personal Data Protection (DPDP) Act, RBI guidelines, and sector-specific regulations all highlight the need for businesses to move quickly to secure, compliant infrastructure. As data volumes soar, Tier-III data centers are becoming the foundation of this sovereign cloud shift.

Growth Trends and Market Drivers

India’s data center market is projected to grow its IT load capacity by roughly 77% by 2027, fuelled by hyperscale expansions, government incentives, and rising enterprise workloads. Organizations are increasingly turning to enterprise colocation in India for scalable and compliant infrastructure. Colocation not only reduces capital expenditure but also gives enterprises resilient hosting environments in certified facilities.

Regional Expansion: Rise of Tier-II and Tier-III Cities

Initially concentrated in Mumbai and Delhi NCR, India’s data center footprint is expanding rapidly into Pune, Jaipur, Bhubaneshwar, and Coimbatore. Factors such as affordable land, renewable energy availability, and improved fiber connectivity are turning Tier-II and Tier-III cities into new digital hubs. This regional spread is vital both for meeting India’s data residency requirements and for giving enterprises nationwide wider access to compliant infrastructure.

Understanding Tier-III+ Data Centers

Tier Classification Explained

Tier classifications, defined by the Uptime Institute, measure reliability and redundancy. Tier-III data centers offer:

- 99.982% expected availability, the figure commonly cited for Tier III (roughly 1.6 hours of downtime per year)

- N+1 redundancy for power and cooling

- Concurrent maintainability without downtime

Tier-IV data centers add fault tolerance and higher redundancy. Tier-III+ facilities offer the balance of cost, reliability, and resilience that sovereign workloads require.
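Availability percentages like the ones quoted for data center tiers translate directly into a yearly downtime budget. The sketch below shows that arithmetic. The function name and the sample percentages are illustrative assumptions, not part of any Uptime Institute tooling, and a 365-day year is assumed.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: a non-leap year (8,760 hours)


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the maximum downtime per year, in hours, implied by an availability percentage."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability must be between 0 and 100")
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


if __name__ == "__main__":
    # Illustrative inputs: a 99.95% SLA and the figures commonly cited for Tier III and Tier IV.
    for pct in (99.95, 99.982, 99.995):
        hours = annual_downtime_hours(pct)
        print(f"{pct}% availability allows about {hours:.2f} hours of downtime per year")
```

Seen this way, a small difference in the percentage matters: moving from 99.95% to 99.982% cuts the yearly downtime budget from about 4.4 hours to about 1.6 hours.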

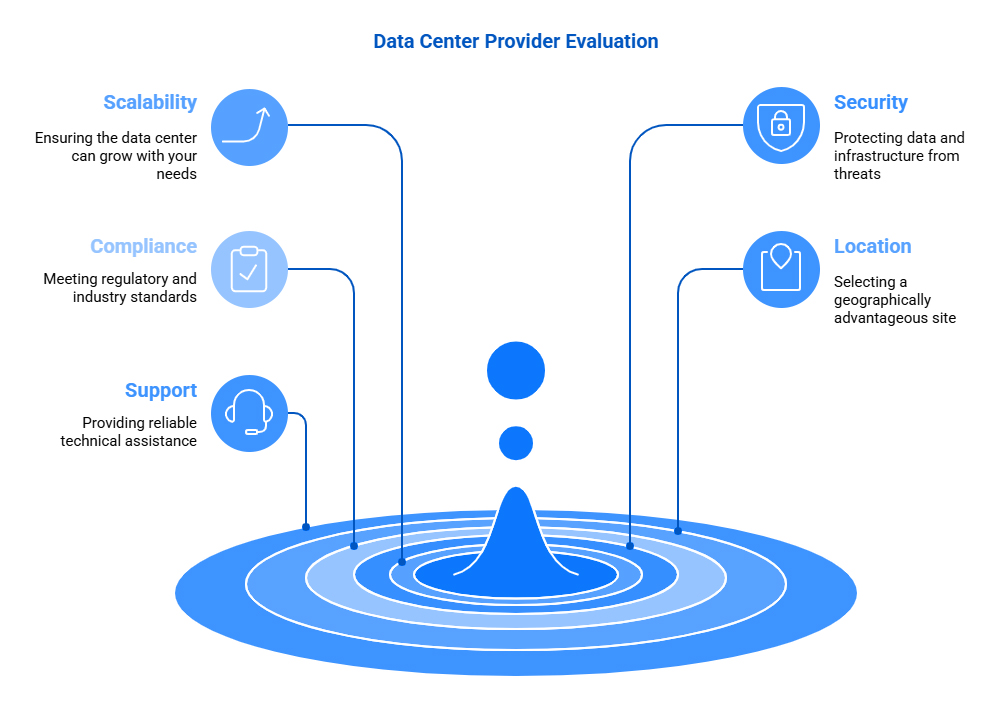

Why Does Tier-III+ Matter for Sovereign Cloud Adoption?

Indian sovereign cloud infrastructure relies on Tier-III+ facilities because they ensure:

- High Availability: Essential for BFSI, healthcare, and public services.

- Regulatory Compliance: Supports local data residency and audit trails.

- Security: Advanced surveillance, intrusion detection, and HSM-based key management.

- Scalability: Ability to host AI, IoT, and big data workloads.

For example, many national payment systems and digital public goods rely on Tier-III+ colocation spaces for uninterrupted services.

Enabling India Sovereign Cloud Infrastructure

Regulatory Compliance and Data Residency

The Digital Personal Data Protection Act, RBI’s localization mandates, and sectoral frameworks in BFSI and government services make India’s sovereign cloud infrastructure indispensable. Tier-III+ data centers help enterprises comply with these rules by ensuring data residency in India: critical workloads and personal data remain within Indian jurisdiction.

Enterprise Colocation: Meeting Performance and Control Needs

Large enterprises and public sector institutions are increasingly choosing enterprise colocation in India to balance cost, performance, and sovereignty. Through data center colocation services, enterprises get:

- Customizable infrastructure with direct cloud connectivity

- Enhanced security controls

- Low-latency access to India’s growing digital ecosystem

This model supports banks, healthcare providers, and even AI-driven enterprises that cannot risk downtime or non-compliance.

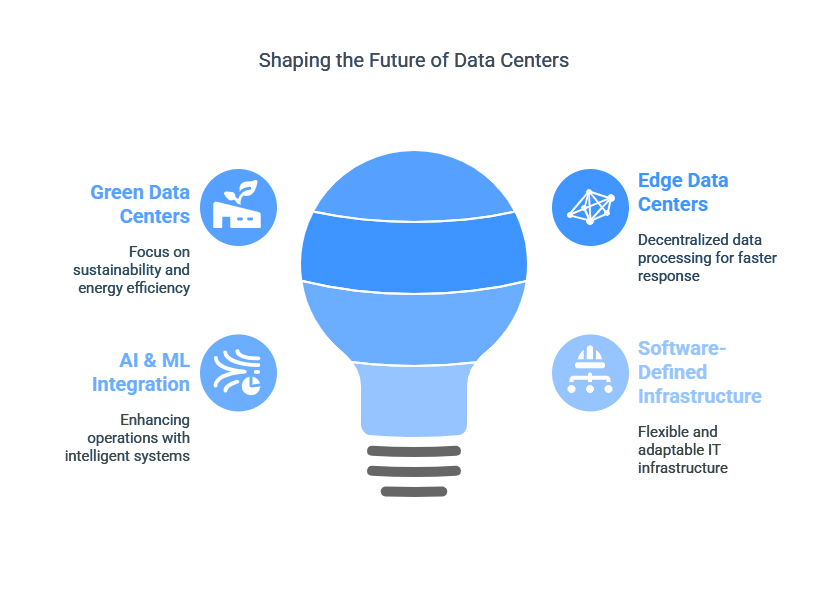

Security, Sustainability, and Future Trends

Modern Tier-III+ facilities focus on three pillars:

- Security: Layered defense with biometric access, air-gapped recovery zones, and compliance certifications (ISO, PCI-DSS).

- Sustainability: Adoption of green power sources, modular cooling, and PUE (Power Usage Effectiveness) optimization.

- Future Readiness: Integration of AI for predictive monitoring and edge deployments to bring sovereign cloud closer to end-users.
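PUE, mentioned under Sustainability, is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so a value closer to 1.0 means less overhead for cooling and power distribution. The following is a minimal sketch of that calculation. The function name and the sample readings are hypothetical and do not describe any particular facility.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Compute PUE as total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be lower than IT equipment energy")
    return total_facility_kwh / it_equipment_kwh


if __name__ == "__main__":
    # Hypothetical meter readings for one billing period, in kWh.
    total_kwh = 1500.0
    it_kwh = 1000.0
    pue = power_usage_effectiveness(total_kwh, it_kwh)
    print(f"PUE = {pue:.2f}")
```

With these sample readings, every kilowatt-hour used by IT equipment costs an extra half kilowatt-hour in cooling, power conversion, and other overhead. PUE optimization aims to shrink that overhead.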

Challenges and The Road Ahead

While Tier-III+ data centers are expanding, challenges persist:

- High Capex: Building large-scale facilities requires billions in investments.

- Skills Gap: Limited availability of skilled professionals in advanced facility management.

- Energy Use: Balancing digital growth with sustainability goals.

India’s Vision: A Federated and AI-Driven Sovereign Cloud

The next decade will witness India’s shift toward federated sovereign clouds, enabling interoperability across government, BFSI, and private enterprises. AI-native data centers will power real-time decision-making, while digital public goods like UPI and ONDC will continue driving demand for sovereign-ready, Tier-III+ infrastructures.

ESDS Sovereign Cloud: Leading the Way

At the forefront of this journey is ESDS Sovereign Cloud, purpose-built for India’s regulatory and digital landscape. ESDS delivers:

- Data centers that have each been granted “Tier-III” status by either QSA International Limited or EPI Certification Pte Ltd. and are located close to major IT and enterprise hubs.

- Community Cloud models tailored for BFSI, government, and enterprises.

- End-to-end compliance with the DPDP Act, RBI, MeitY, CERT-In audit, and other mandates.

- Integrated colocation and cloud hosting services with unmatched uptime & green energy commitments.

- Guaranteed uptime of at least 99.95%, backed by power redundancy, disaster recovery services, and a 24/7 support team.

By combining sovereign control with hyperscale-grade performance, ESDS enables enterprises and governments to accelerate digital transformation without compromising sovereignty or compliance.

Frequently Asked Questions (FAQs)

- What is a Sovereign Cloud?

A sovereign cloud ensures all sensitive data stays within India’s borders under national jurisdiction.

- Why are Tier-III data centers crucial for Sovereign Cloud adoption?

They provide high availability (99.982% is the figure commonly cited for Tier III), N+1 redundancy, and compliance support for secure, always-on operations.

- What makes ESDS Sovereign Cloud unique?

It’s purpose-built for India’s regulatory ecosystem, offering Tier-III certified, compliant, and sustainable cloud solutions.

- How does ESDS ensure data security and compliance?

Through ISO, PCI-DSS, and MeitY-certified facilities with advanced encryption.

- How does the DPDP Act influence cloud adoption in India?

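It tightens obligations on how personal data is processed and allows the government to restrict cross-border transfers. Together with sectoral rules such as RBI’s localization mandates, this drives organizations towards compliant, India-based cloud infrastructures.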

Conclusion

India’s sovereign digital future depends on resilient and compliant infrastructure. With the rise of India’s sovereign cloud infrastructure, Tier-III data centers have become central to enabling secure, scalable, and regulation-ready services.

As enterprises adopt enterprise colocation, supported by providers like ESDS Sovereign Cloud, India moves closer to a federated, sustainable, and AI-driven digital ecosystem. Tier-III+ facilities are no longer just technical assets; they are strategic enablers of India’s ambition for data sovereignty and digital self-reliance.
